I consider myself to be a pragmatic fan of test-driven development (TDD), writing maybe 30-50% of my code using the technique.

Having done it in four different tech stacks over the past few years, I look forward to TDD a lot more in some of them than in others.

Let’s compare a few of the experiences to see what they get right and what they don’t, from best to worst:

- .Net: xUnit and NCrunch

- JS/TS: Jest and VS Code Jest extension

- Python: pytest

- Java: IntelliJ and Infinitest

What makes a good TDD experience

The main differences between test-driven development and regular development are:

- You write a lot more unit tests,

- You run unit tests more often,

- You debug the unit test failures more often.

So if the tech stack’s tools and libraries are better set up to support these, then the TDD process will be more pleasant.

The winner: .Net

NCrunch

The main star of the .Net TDD ecosystem is the NCrunch add-in for Visual Studio. The best description of it is this animation from its landing page (unfortunately, it looks best on desktops and tablets):

In it we see a few things:

- NCrunch runs continuously as you type.

- The colored dots on the left of each line let you know whether the line is covered or not.

- The same dots go red when the tests covering the line fail.

- Dots turn into a red “X” when that line throws an exception. You can hover over the “X” to see the exception.

- The context menu on each line lets you run the test and break at that line.

As a result:

- Tests run continuously, with very fast feedback,

- Feedback is given in the context of the code you’re looking at,

- Debugging is very easy and is often unnecessary.

The overall experience is very smooth, with most of the time spent on writing the tests and the code and very little on debugging or troubleshooting the tools.

The downside is the price. As with many other tools for the Microsoft stack, NCrunch is proprietary, with a $159 one-time fee per named user, though in my experience it is totally worth it.

xUnit

The other outstanding element of the .Net ecosystem is the xUnit test framework. While similar to other *Unit frameworks, it makes one subtly different choice that makes life easier: it creates a new instance of the test class for each test. Aside from saving a bit of typing (“before-each” functions are replaced with constructors), this ensures that the mess left by one test function does not impact another, which eliminates a whole class of test issues.
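Python’s built-in `unittest` happens to follow the same one-instance-per-test rule, so it can show the effect in a few lines. In this minimal sketch (the `CartTests` class and its tests are hypothetical), state created in the constructor is fresh for every test, while a class-level list is shared and collects entries from both tests. Real `unittest` code would normally use `setUp()`; the constructor is used here only to mirror the xUnit design described above.

```python
import unittest


class CartTests(unittest.TestCase):
    # Class-level state: one list shared by every test in the class.
    shared_items = []

    def __init__(self, method_name="runTest"):
        super().__init__(method_name)
        # Instance-level state: unittest builds a separate CartTests object
        # for each test method, so every test starts with its own empty list.
        self.items = []

    def test_add_one(self):
        self.items.append("apple")
        CartTests.shared_items.append("apple")
        self.assertEqual(self.items, ["apple"])

    def test_add_another(self):
        self.items.append("pear")
        CartTests.shared_items.append("pear")
        # Passes in any test order: this instance never sees "apple".
        self.assertEqual(self.items, ["pear"])


def run_suite():
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    result = run_suite()
    print(result.wasSuccessful())          # True: each test saw only its own self.items
    print(sorted(CartTests.shared_items))  # ['apple', 'pear']: the class-level list leaked
```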

Finally, both major .Net test frameworks, NUnit and xUnit, belong to a rare group of frameworks that provide an easy way to write inline test cases, like this:

```csharp
public class SomeTests { /* ... */ }
```

Pytest does a good job with this as well. Jest doesn’t, but at least the flexibility of JavaScript lets you improvise something. Java’s JUnit 5 introduced something similar with parameterized tests, but their ergonomics are very poor due to the JVM’s quirks.
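For comparison, Python’s standard library has no decorator for inline cases (in pytest that role is played by `@pytest.mark.parametrize`), but `unittest`’s `subTest` supports a table-driven style close to the improvised JavaScript approach. A minimal sketch, assuming a hypothetical `slugify` function as the code under test:

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: lowercase words joined by hyphens."""
    return "-".join(title.lower().split())


class SlugifyTests(unittest.TestCase):
    # Each row is one test case: (input, expected output).
    CASES = [
        ("Hello World", "hello-world"),
        ("  padded   title ", "padded-title"),
        ("single", "single"),
        ("", ""),
    ]

    def test_slugify(self):
        for title, expected in self.CASES:
            # subTest records each failing row separately and keeps looping,
            # instead of stopping at the first failed assertion.
            with self.subTest(title=title):
                self.assertEqual(slugify(title), expected)


if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyTests)
    unittest.TextTestRunner().run(suite)
```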

Downsides

Moq is a nice mocking framework, but it can only mock what it can override (interfaces, plus abstract and virtual members), which in some cases is not a lot. For this reason, mocking many things in ASP.NET and the rest of the .Net Framework is not straightforward. This has been gradually getting better in .Net Core.

In addition, though C# syntax has evolved a lot over the years, its type system sometimes forces you to write pretty contrived expressions.

Putting it all together

The end result is that in .Net my experience writing tests has more “Oh, you can do that?” and less “WTF happened just now?” than in other tech stacks, which is one of the reasons I chose it for my personal projects.

Runner-up: JS/TS, Jest, VS Code

So how does working with Jest compare to the xUnit/NCrunch combo above? Let’s write something.

```javascript
describe("Submit button tests", () => { /* ... */ });
```

The awesome:

- Writing simple tests is super simple. Just write the `test()` function.

- Test names can be proper English sentences. Great for readability!

- `describe()` lets us group test suites and sub-suites. And these can also have nice, readable names!

- The power of JS lets us mock anything. Don’t like interfaces? Then don’t use them; mocking still works! (But I like them anyway.)

The downsides:

- Have to improvise parametrized tests with a `.forEach(() => { test(...) })`. It works, but it requires a bit more thinking every time.

- Test functions can interfere with each other. You learn quickly to not do the stupid things that cause that, but again, it adds a bit of mental overhead.

Running and debugging the tests involves a bit of friction. You have two options for running the tests continuously: (1) `jest --watch` and (2) the VS Code Jest extension.

The first option works, and it gives you regular feedback whenever tests break or pass. It points you to the offending line too. But debugging has to be done through `console.log()`, and the test runs can take a while even on modest codebases. It also occupies additional screen real estate as a separate terminal window, which is not terrible, but not fantastic either, especially on a laptop.

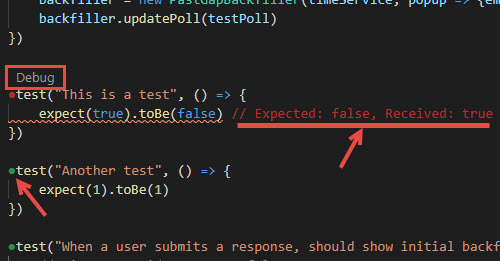

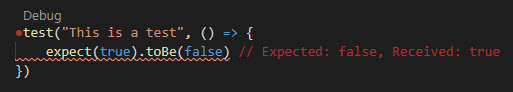

The second option, the Jest VS Code extension, integrates the test results with the IDE and gives a handy “Debug” link above each test. Clicking that link launches the VS Code debugger.

The debugger integration is quite solid, but the rest is not. After saving the file (and remember, with NCrunch we didn’t even have to save), you have to be a bit patient and let the extension catch up. After a few hours of work, the extension inevitably crashes and will not start up again until VS Code reloads the folder.

Altogether, this leads to a continuous testing experience that’s workable, but not the best.

Python’s pytest: probably second place too

My experience with Python is not as extensive as with the other three languages on the list, but VS Code with pytest seems to offer an experience similar to `jest --watch`.

The pytest framework itself is a bit more pleasant, and mocking seems a bit easier. The downside is the lack of static types, which means less help from the IDE. IDE integration for Python unit testing in general also seems more limited.
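One reason mocking can feel easier in Python is that the standard library’s `unittest.mock.patch.object` can temporarily replace an attribute on almost any object, with no interface or virtual member required (compare the Moq limitation above). A minimal sketch; `PriceService`, `fetch_price`, and `price_with_tax` are hypothetical names, and the test is a plain function that pytest would collect and that also runs standalone:

```python
from unittest import mock


class PriceService:
    """Hypothetical dependency, e.g. something that would call a remote API."""

    def fetch_price(self, sku: str) -> float:
        raise RuntimeError("network access not available in tests")


def price_with_tax(service: PriceService, sku: str, rate: float = 0.2) -> float:
    """Hypothetical code under test."""
    return round(service.fetch_price(sku) * (1 + rate), 2)


def test_price_with_tax():
    service = PriceService()
    # patch.object swaps fetch_price on this one object for the duration of
    # the with-block, even though PriceService implements no interface.
    with mock.patch.object(service, "fetch_price", return_value=10.0) as fake:
        assert price_with_tax(service, "ABC-1") == 12.0
    # The mock remembers its calls after the original method is restored.
    fake.assert_called_once_with("ABC-1")


if __name__ == "__main__":
    test_price_with_tax()
    print("ok")
```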

The laggard: Java, IntelliJ

Finally, let’s write and run some tests using Java, JUnit 5, IntelliJ, and Infinitest.

Right off the bat, you can run into problems with the `@Test` annotation and other JUnit API elements. They exist in both the `org.junit` (JUnit 4) and `org.junit.jupiter.api` (JUnit 5) packages. If you’re using Spring Boot, you probably somehow have both, and it’s easy to accidentally import the wrong one and spend half an hour troubleshooting the resulting problems (and then helping every new coworker who accidentally does the same). After two years with JUnit 5, I still repeat that mistake.

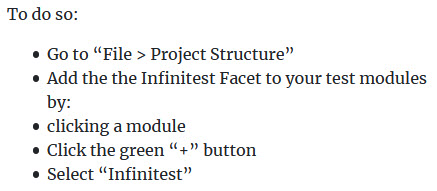

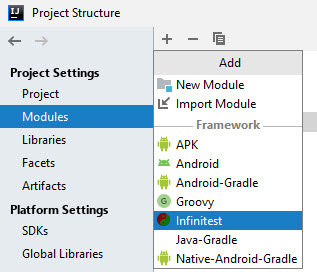

The second problem we run into is enabling continuous testing. In theory, Infinitest and IntelliJ should work seamlessly together: just install Infinitest, add it to the project, enable continuous builds, and you’re done. That’s the experience with NCrunch and the Jest VS Code extension.

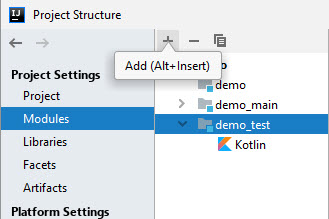

In practice, though, something always goes wrong. Just now, for this blog post, I tried to set up a new project with Infinitest. Even though I’ve done this a couple of times in the past few years, it took me about five minutes just to translate these instructions from the Infinitest website:

into these steps:

due to the different places where you can look at the facets and find a “+” button that has an “Infinitest” option. It still didn’t run.

In any case, in the past I had to stop running Infinitest because the continuous IntelliJ builds were giving me trouble; ultimately, it was easier to just run the tests manually.

There are also a bunch of other minor points of friction, like the “command line too long” error on Windows or the awkward interface for parametrizing unit tests.

To be fair, there are some bright spots. Running and debugging the tests is pretty smooth, and I can use the context menu to debug a specific test.

Or this one if you’re using Kotlin:

```kotlin
fun `That's valid Kotlin function name`() {}
```

Which is great for writing descriptive test names.

But overall, TDD is noticeably less pleasant in Java than in other tech stacks.