TL;DR: After the analysis in this post, I started using SLANT PRICE DOUGH BALMY, but it may not be the best sequence for you.

There are lots of great videos and articles on the optimal Wordle strategy. I particularly enjoyed this YouTube video by 3Blue1Brown on using information theory to come up with the best guesses.

But I can’t play like that. First, I’m lazy, and second, my slow human brain can’t do information theory calculations or depth-first searches.

Instead, every time I play Wordle, I enter the same sequence of words until it feels like there aren’t many solutions left, at which point I try to make more tailored guesses.

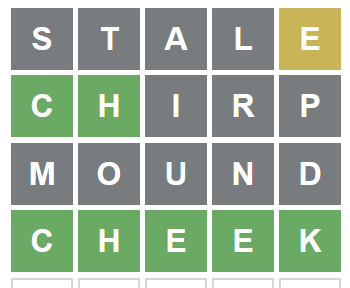

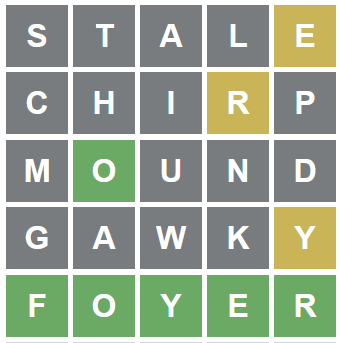

Here are a couple examples of my recent games:

Until today, my go-to words were STALE, CHIRP, MOUND, and GAWKY, picked mostly by gut feel while trying to check as many frequently used letters as possible. So far I’ve been doing alright with them.

But can we do better?

Yes! Let’s use math and programming to do better.

Though to do computer science, we’ll need a more precise algorithm than “play these words until it feels like there aren’t many solutions left”. Let’s go with this:

- Pick four words.

- In every game, keep guessing these words in order.

- If there are 3 or fewer possible solutions left, make tailored guesses that minimize the number of turns to victory.

Oh, and let’s use only the list of 2309 valid solution words (helpfully provided in Wordle’s JavaScript source code), and ignore the larger list of valid guesses. In part, that’s to make the calculations simpler, and in part because you’ll forget that THEWY is an acceptable guess by the time you finish reading this sentence.
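The three rules above can be sketched in Python roughly like this. It is a minimal sketch, not the code behind the numbers in this post: the function names are made up for illustration, and the "tailored guess" step is an assumed stand-in (pick the remaining candidate whose worst-case split is smallest) rather than a true search for the fewest turns to victory.

```python
from collections import Counter

def feedback(guess, solution):
    """Wordle-style pattern: G = green, Y = yellow, . = gray."""
    pattern = ["."] * len(guess)
    # Solution letters not matched in place; these can still earn a yellow.
    unmatched = Counter(s for g, s in zip(guess, solution) if g != s)
    for i, (g, s) in enumerate(zip(guess, solution)):
        if g == s:
            pattern[i] = "G"
        elif unmatched[g] > 0:
            pattern[i] = "Y"
            unmatched[g] -= 1
    return "".join(pattern)

def worst_bucket(guess, candidates):
    """Largest group of candidates that would share one feedback pattern."""
    return max(Counter(feedback(guess, w) for w in candidates).values())

def play(solution, sequence, solutions, threshold=3, max_turns=6):
    """Return the number of guesses used, or None if the game is lost."""
    candidates = list(solutions)
    for turn in range(1, max_turns + 1):
        if len(candidates) > threshold and turn <= len(sequence):
            guess = sequence[turn - 1]  # keep following the fixed sequence
        else:
            # Assumed heuristic for the tailored guess (see the note above).
            guess = min(candidates, key=lambda g: worst_bucket(g, candidates))
        if guess == solution:
            return turn
        pattern = feedback(guess, solution)
        candidates = [w for w in candidates if feedback(guess, w) == pattern]
    return None

def average_guesses(sequence, solutions, threshold=3):
    """Average guesses over won games, plus the win rate.

    Assumes at least one game is won.
    """
    results = [play(s, sequence, solutions, threshold) for s in solutions]
    wins = [r for r in results if r is not None]
    return sum(wins) / len(wins), len(wins) / len(results)
```

With the real solution list loaded into a Python list of lowercase words, something like `average_guesses(["stale", "chirp", "mound", "gawky"], solutions)` would score one sequence; because the tailored step here is only a heuristic, the result will not necessarily match the averages quoted in this post.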

Pick words that produce the fewest remaining possible solutions?

That was the first thing I tried.

For the first word, I picked the one that resulted in the lowest remaining information entropy (basically, the fewest remaining options) on average across all 2309 valid solutions. That turned out to be RAISE.

Then, I picked the next word that gave me (on average, across all solutions) the most information after guessing RAISE. That turned out to be CLOTH. And so on, getting DUMPY and BEGAN as the best third and fourth words.

(Along the way, I had to keep in mind that I had to switch to “smart” guesses if I had 3 or fewer valid solutions left.)
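To make "remaining entropy" concrete, here is a rough sketch of how a single guess could be scored. It assumes every solution is equally likely, groups the candidates by the feedback pattern the guess would produce, and measures what is left in each group. The names `guess_stats` and `feedback` are illustrative, and the weighted average used for the expected number of options is one possible choice of averaging, not necessarily the one behind the table that follows.

```python
import math
from collections import Counter

def feedback(guess, solution):
    """Wordle-style pattern: G = green, Y = yellow, . = gray."""
    pattern = ["."] * len(guess)
    # Solution letters not matched in place; these can still earn a yellow.
    unmatched = Counter(s for g, s in zip(guess, solution) if g != s)
    for i, (g, s) in enumerate(zip(guess, solution)):
        if g == s:
            pattern[i] = "G"
        elif unmatched[g] > 0:
            pattern[i] = "Y"
            unmatched[g] -= 1
    return "".join(pattern)

def guess_stats(guess, candidates):
    """Score one guess against a list of equally likely candidate solutions.

    Returns (expected remaining entropy in bits,
             expected number of remaining options,
             worst-case number of remaining options).
    """
    buckets = Counter(feedback(guess, w) for w in candidates)
    total = len(candidates)
    # A bucket of size n is hit with probability n / total and then leaves
    # log2(n) bits of uncertainty.
    entropy = sum(n / total * math.log2(n) for n in buckets.values())
    expected = sum(n * n for n in buckets.values()) / total
    return entropy, expected, max(buckets.values())
```

The greedy pick for a position is then the word with the lowest first value, for example `min(solutions, key=lambda g: guess_stats(g, solutions)[0])`, repeated on whatever candidates the earlier words leave behind.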

Here’s what each word contributed:

| Guess | Avg. remaining entropy (bits) | Avg. options remaining | Worst-case options remaining |
|---|---|---|---|
| RAISE | 5.2 | 17.5 | 167 |
| CLOTH | 1.8 | 2.38 | 30 |
| DUMPY | 0.8 | 1.53 | 6 |
| BEGAN | 0.4 | 1.28 | 4 |

But how does it compare to the original STALE CHIRP MOUND GAWKY? If we simulate all 2309 possible games, we see that:

- RAISE CLOTH DUMPY BEGAN wins all games and uses 3.89 guesses on average.

- My original STALE CHIRP MOUND GAWKY wins all games and uses 3.84 (!) guesses on average.

😲 ⁉️ My original gut-feel word choices did better?

Because each word being optimal ≠ the full set is optimal

So it turns out it’s not enough to optimize each word; instead, we have to jointly optimize the entire sequence WORD1 WORD2 WORD3 WORD4 across all possible word sequences.

But that’s a lot of sequences! I’m not sure how much exactly 2309⁴ is, but it’s definitely more than my computer can handle. Even doing some intelligent pruning (e.g. ‘a letter must not repeat in the first 3 words’) still leaves a lot of options to evaluate. What else can we do to cut it down?

What I ended up doing was ranking all words by the number of bits of information that they provide, picking the top 10-100 words at each position for a total of ~160,000 four-word combinations, and then simulating all possible games for each sequence.
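For a sense of scale, the full space is about 2.8 × 10¹³ four-word sequences, which is why a shortlist per position is needed. The sketch below shows one way to enumerate sequences from per-position shortlists while applying the "no repeated letter in the first 3 words" pruning. The shortlists in the usage note are tiny made-up examples, and `candidate_sequences` is a hypothetical helper, not the code that produced the results in this post.

```python
import itertools

# Every ordered choice of four words from the 2309 solutions.
FULL_SPACE = 2309 ** 4

def no_repeated_letters(words):
    """True if no letter occurs twice across the given words."""
    letters = "".join(words)
    return len(set(letters)) == len(letters)

def candidate_sequences(shortlists):
    """Yield four-word sequences, one word from each position's shortlist.

    Sequences whose first three words repeat a letter are skipped.
    """
    for seq in itertools.product(*shortlists):
        if no_repeated_letters(seq[:3]):
            yield seq
```

For example, `list(candidate_sequences([["slant", "crane"], ["price", "spilt"], ["dough"], ["balmy"]]))` keeps SLANT PRICE DOUGH BALMY and CRANE SPILT DOUGH BALMY and drops the two combinations that reuse a letter. Each surviving sequence would then be scored by simulating every game.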

After two days at 100% CPU, my computer gave me the answer:

SLANT PRICE DOUGH BALMY: 3.79991 average guesses to win.

But there were a lot of other sequences that produced similar results. For example, the #2 sequence was CRANE SPILT DOUGH BALMY, which took 3.8008 guesses on average to win.

But what if I’m smart and tailor my guesses sooner?

Or, to be less flippant, you can often get into situations where there are more than three solutions left, but you can easily enumerate them. The simplest example is if your first guess SHARE results in something like SHARE.

So let’s say that we start making tailored guesses when there are five possible solutions left instead of three. How does this change our optimal sequence?

After another day at 100% CPU, I ended up with TRICE SALON DUMPY GLOBE, which solved the puzzle in 3.72 steps on average.

But SLANT PRICE DOUGH BALMY wasn’t far behind, at 3.74 average guesses, and even the original STALE CHIRP MOUND GAWKY clocked in at a respectable 3.79 guesses.

But what if the word list changes?

That’s a good question. It wouldn’t do to have a set of words that’s hyper-optimized for Wordle but not other similar puzzles.

So I took the list of words ranked by their occurrence in Google Books, and selected the top 3000 five-letter words.

Redoing the same analysis here gives us a new winner: SAINT BORED CLUMP FIGHT at 3.91 guesses to win, though again, SLANT PRICE DOUGH BALMY is not far behind with 3.96 guesses (although with a 99.93% win rate), and even my original STALE CHIRP MOUND GAWKY comes in at a not-terrible 3.98 average guesses and a 99.90% win rate.

So what’s the lesson?

It turns out that the optimal sequence really depends on how you play the game and which Wordle-like game you play.

But if you want a good-enough sequence, it looks like you can pick any three words that cover the most frequently used letters, and then a fourth word that complements the other three well.

Personally, I think I’ll try SLANT PRICE DOUGH BALMY for the next while.